Hi! Right now, even in polyphonic mode, when a key is pressed and released, the next key will “cut” its release phase. It would be nice to be able to prevent this by making the keyboard change voice at every new key.

When the keyboard is set to polyphonic, both the frequency and gate signals become polyphonic, so one key’s release shouldn’t affect the next key. Also when in polyphonic mode the gate is fixed at one, rather than being velocity sensitive. I believe that will be corrected in a future update. In the interim, I developed a patch to provide velocity when running in poly mode.

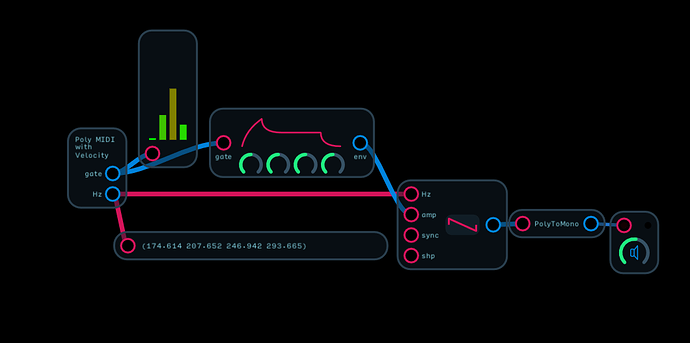

I’ve connected a meter and value node to the modified keyboard node, which is set for 4-note polyphony. You can see that there are 4 gate signals and 4 frequencies. I’ve also connected an ADSR and Oscillator node. You’ll note that the PolyToMono node is placed after the Oscillator node. Because there is a quad signal on both the gate and frequency outputs, there will actually be 4 copies of the Oscillator running, each independently controlled by one of the 4 copies of the ADSR, so each note has its own sustain and release. You will need to open the module if you want to change the poly mode or channel.

Polyphonic MIDI interface with Velocity Demo.audulus (10.9 KB)

BTW, since multiple copies of the oscillator and envelope control logic are needed when running in polyphonic mode, using higher values of polyphony can create a significant load on the CPU. It’s best to limit polyphony to what you will actually need, and convert back to a mono signal as early in the signal path as is practical. For example if I wanted to add a Delay node for some echo, I would place it after the PolyToMono node rather than before, to avoid having multiple copies of the Delay node running.

The problem is that the polyphonic behavior only works when the next key is pressed before the previous key is released.

For example, I downloaded your patch and did the following:

A)

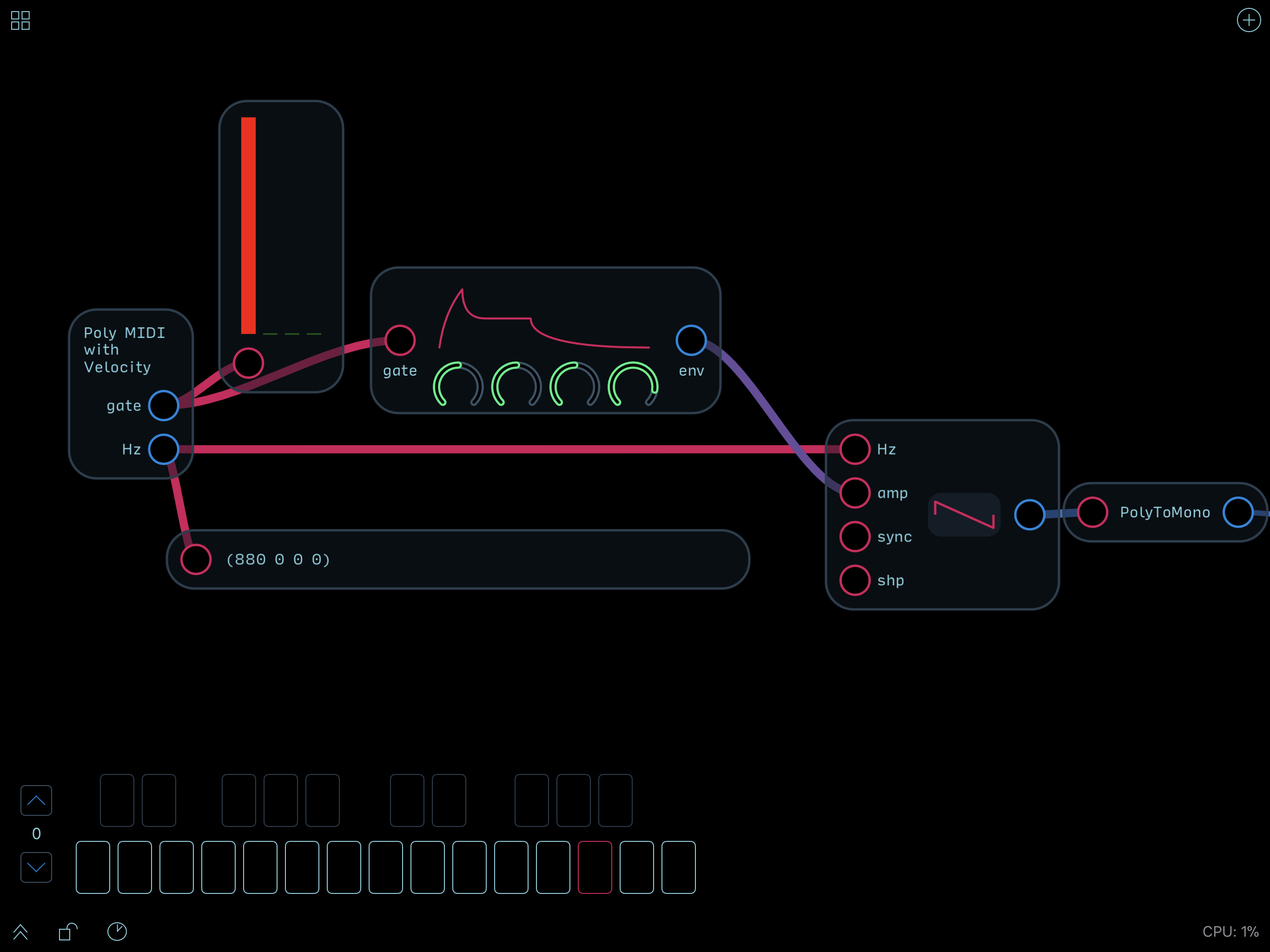

- pressed 880 Hz key:

- released the key and, while the 880 Hz sound was still running (because of a long release parameter), pressed the 440 Hz key:

The issue is that the 440 Hz key is triggered on the same voice/oscillator as the 880 Hz key, interrupting the release phase of the 880 Hz sound (producing an unwanted legato effect).

B)

Now, if I do the same thing first:

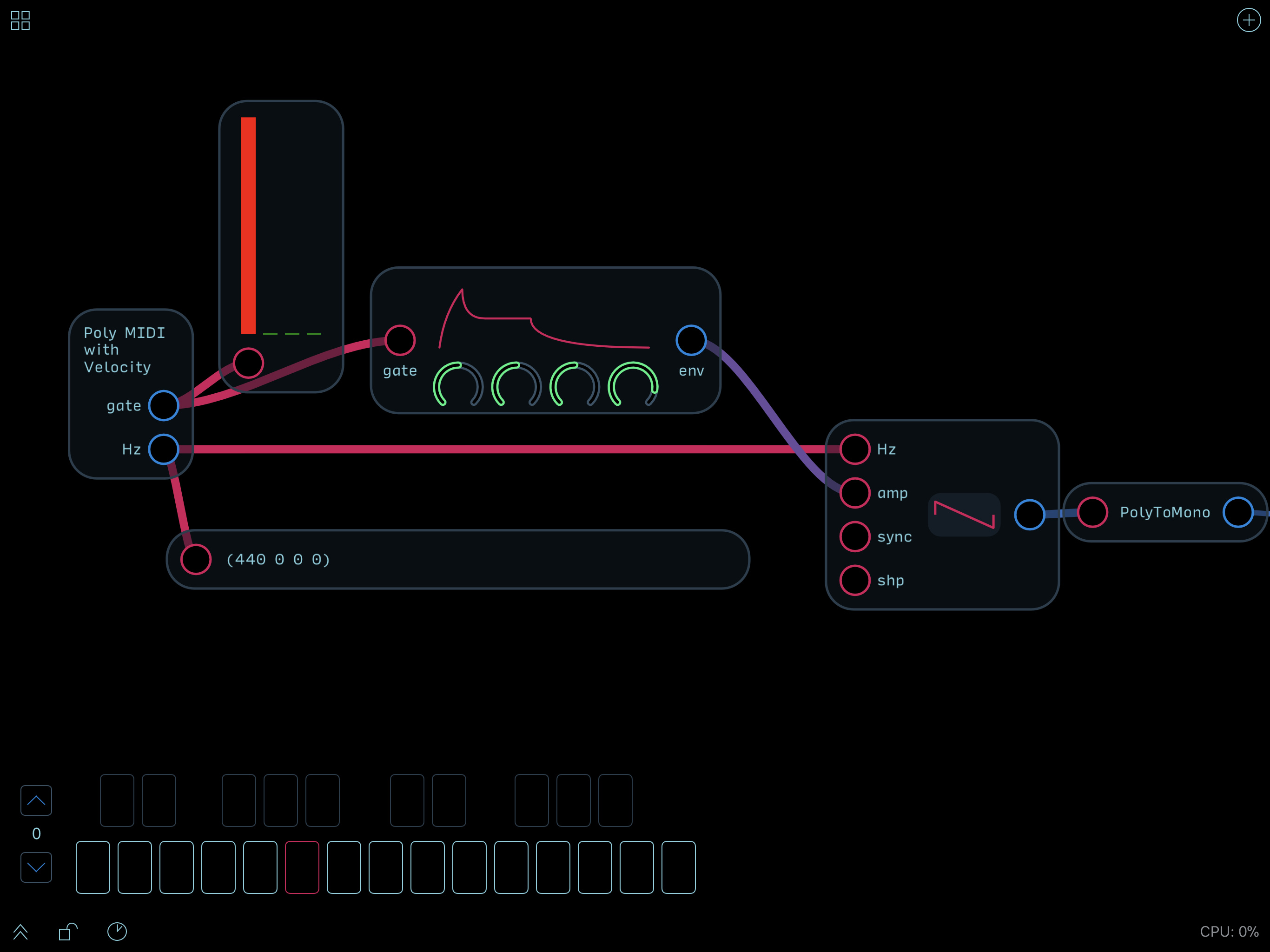

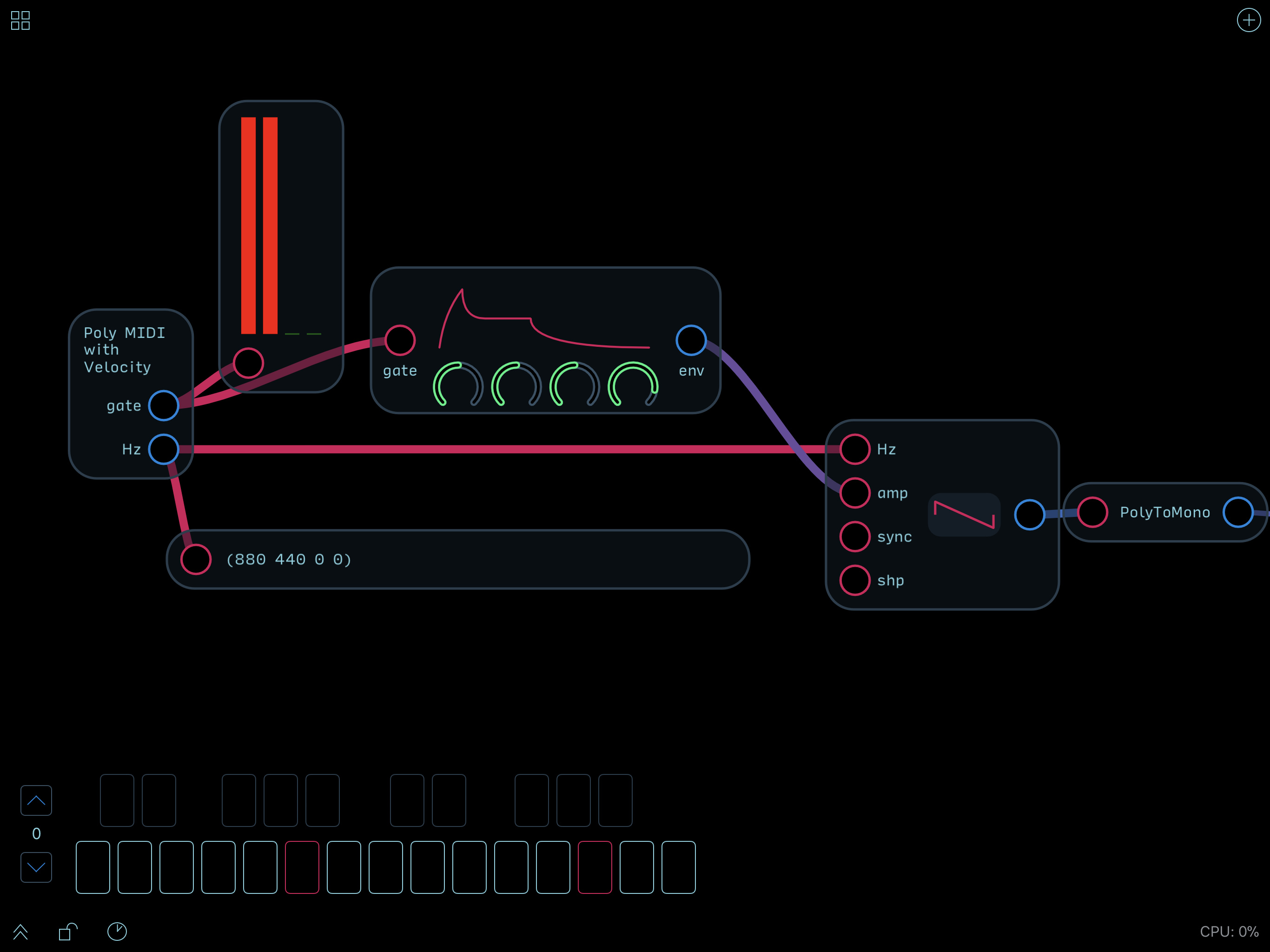

- press the 880 Hz key

- but then, without releasing the 880 Hz key, press the 440 Hz key

Now the keys are assigned to different voices, as they should be.

It would be nice if the keyboard always behaved as in B) rather than A), regardless of whether the previous key is still being held. It looks like, instead of automatically switching to voice 2, the keyboard checks whether voice 1 is currently “available” and, if so, uses it, which causes some unwanted “legato” behavior.

I see what you mean. I misunderstood your initial comment. Since the keyboard node has no way to determine if a note is still sounding, it wouldn’t be possible to use that as the basis for a channel switch. If the keyboard simply rotated the active poly channel instead of starting from the first channel each time it would reduce the issue. I ran into some similar problems building a poly keyboard latch. I could probably build something that would rotate the active channel at least for 4 note polyphony. Unfortunately there’s currently no way to break out an 8 or 16 channel signal. I’ll give it some thought.

@Mederic - Thanks for pointing this out! We have it solved in the latest beta. The way it works now is like this:

Each new note pressed gets assigned to its own poly channel. That note will remain on the same channel as long as the poly count hasn’t been exceeded (for example, by playing 5 notes with 4-voice polyphony). If the number of channels is exceeded, the oldest note is dropped and the new note is assigned to that channel.
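For anyone who wants to see the allocation rule above in concrete form, here is a minimal Python sketch. It is not the Audulus implementation: the class name `VoiceAllocator`, its methods, and the choice to reuse the free channel that has been idle longest are all assumptions made for illustration. Only the "steal the oldest note when the poly count is exceeded" rule comes from the description above.

```python
class VoiceAllocator:
    """Hypothetical poly-channel allocator: one channel per note, oldest note stolen when full."""

    def __init__(self, num_channels):
        self.num_channels = num_channels
        self.channel_note = [None] * num_channels  # note currently held on each channel
        self.last_used = [0] * num_channels        # tick at which each channel last received a note
        self.tick = 0

    def note_on(self, note):
        """Assign a note to a channel and return the channel index."""
        self.tick += 1
        free = [c for c in range(self.num_channels) if self.channel_note[c] is None]
        if free:
            # Assumption: pick the free channel that has been idle longest,
            # so a recent release tail is the least likely to be cut off.
            channel = min(free, key=lambda c: self.last_used[c])
        else:
            # Poly count exceeded: drop the oldest held note and reuse its channel.
            channel = min(range(self.num_channels), key=lambda c: self.last_used[c])
        self.channel_note[channel] = note
        self.last_used[channel] = self.tick
        return channel

    def note_off(self, note):
        """Release a note; return the channel it was on, or None if it was not held."""
        for channel, held in enumerate(self.channel_note):
            if held == note:
                self.channel_note[channel] = None
                return channel
        return None
```

With this sketch, pressing 880 Hz, releasing it, and then pressing 440 Hz puts the second note on a different channel, which is the behavior requested in case A) earlier in the thread.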

The beta seems to be working well so it should be released in no time!

Glad to hear it!

Thank you very much for your replies, thx in advance for the next release, and for Audulus altogether

Actually, there is a case where that legato effect might be wanted while in poly mode: when you sweep through notes.

I think it could be done by detecting whether the interval between the note-on time of a key X and the note-off time of a key Y is under some very small threshold. If so, “sweeping” is detected and key X is triggered on the same channel as key Y. Of course it would be an optional feature, as it’s not always the behavior one might want, but here is an example where it would be useful for giving an instrument a more “organic” feel:

When you play a solo synth string patch, it would sometimes allow glissandos, as when a violinist slides along a given string, and sometimes independent notes, as when the violinist switches strings to play a note, letting the first string naturally decay/release while the second string is attacking (that last behavior is not possible in legato mode).
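To make the sweep idea a bit more concrete, here is a rough Python sketch of the threshold test. The function name `choose_channel`, its parameters, and the 30 ms default are all made up for illustration; a usable threshold would need tuning by ear.

```python
def choose_channel(note_on_time, last_note_off_time, last_channel, next_channel,
                   threshold=0.03):
    """Return last_channel when a sweep is detected, otherwise next_channel.

    Times are in seconds. A sweep is assumed when the new note-on falls within
    `threshold` seconds of the previous note-off (hypothetical 30 ms default).
    """
    if last_note_off_time is not None:
        gap = abs(note_on_time - last_note_off_time)
        if gap <= threshold:
            # Keys changed almost simultaneously: treat as a slide on the same "string".
            return last_channel
    # Otherwise let the old note release on its own channel.
    return next_channel
```

A gap of 10 ms between release and press would keep the note on the same channel (glissando), while a gap of half a second would move it to the next channel so the first note can decay naturally.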

Please add a MIDI-Out Module!

Once that happens (yes that is in the works) Audulus will be controlling all my synths

Might be fun to have arbitrary delay (z^-N) with feed forward capabilities in Audulus 4.

The Moog Model 15 app for iOS allows a QWERTY keyboard to be used as an actual keyboard to trigger notes (along with other app-specific shortcuts). Although it may not be the most requested item on the list, I think two additions would be extremely useful: the ability to trigger notes from a QWERTY keyboard in place of the onscreen keyboard, and keyboard shortcuts for regularly used Audulus processes.

I have the Apple keyboard with my iPad Pro and tend to use this feature on the Model 15 app a lot when out and about. This is the only iOS synth I know that offers this feature and I don’t understand why more iOS music apps don’t implement similar features for those that don’t always have a MIDI keyboard with them but may have a Bluetooth QWERTY or the Apple keyboard.

There are a couple of limitations with the iOS implementation of keyboard support that make this a bit tricky. Only the foreground app can receive keystrokes from an external keyboard, so any kind of keyboard to MIDI translation has to be done with the translator in the foreground. There is an iOS app that does this called DoubleDecker, but you would have to background Audulus for it to work. Secondly iOS only sends keypress messages, so there is no way for an app to know when a key is released. That means you have to have a fixed note duration or have some other keystroke for note off. Still it would be useful for those who use an external keyboard with their iPad.
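As a rough illustration of the fixed-duration workaround, taking the keypress-only limitation described above at face value, here is a small Python sketch. The function name `keypresses_to_note_events`, the event tuple layout, and the 0.25 s default are hypothetical and not part of any iOS or Audulus API.

```python
def keypresses_to_note_events(keypresses, duration=0.25):
    """Turn keypress-only input into note-on/note-off pairs with a fixed duration.

    keypresses: list of (time_in_seconds, midi_note) tuples.
    Returns a list of (time, kind, midi_note) events sorted by time,
    where kind is "on" or "off".
    """
    events = []
    for press_time, note in keypresses:
        events.append((press_time, "on", note))
        # No key-release message is available, so the note-off is synthesized.
        events.append((press_time + duration, "off", note))
    # sorted() is stable, so events with equal times keep their insertion order.
    return sorted(events, key=lambda event: event[0])
```

The alternative mentioned above, a dedicated keystroke for note off, would replace the synthesized `"off"` event with one generated when that key is pressed.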

Interesting. That does make it a bit tricky. Thinking more about it, I think the shortcuts feature would be the more useful of the two, if it can be done. I don’t expect a lot of priority to be given to it, or for it to be done at all, but a Copy/Paste function through a keyboard would greatly streamline my patch editing on an iPad.

The infinite possibilities of touch surfaces vs the immediacy of dedicated buttons and knobs

I agree. I do almost all of my editing on the Mac because the keyboard/mouse combo is so much faster than the iPad UI. Even if you didn’t implement a virtual keybed, the ability to use keystroke based shortcuts would definitely speed things up. I’m not sure if it’s possible to capture keystrokes from an external device without invoking the virtual keyboard which you clearly wouldn’t want. I’ll have to look into it in the docs.

I really wish it was faster to edit on iOS. Would love to hear people’s ideas about this in particular if there are any. I think the new module browser made it a lot faster, but it’s still not as fast as doing it on the computer.

Maybe when we have built in motion tracking/mindreading we can do more Minority Report-like stuff.

This!

But in the meantime, bringing Windows into the 20th century would be great - using the backspace or delete keys to remove nodes (they currently seem to do nothing) would really improve workflow.

Two feature requests:

- Can the Random node be changed so that each instance is given a unique seed?

Background: I recently made a tap sequence module, of which I use several at a time in larger sequencer projects. I play guitar and banjo, and just wanted to have something more interesting to practice in time with than a metronome.

In the module, the steps, or just a range of steps (it’s a 16-step sequencer), can be reset. Initially I built the sequencer to reset a step to 0, but the other day I decided to try using the reset mechanism to set steps at random, so that any value from a Random node greater than some constant sets the step to 1. I’ve been experimenting with polyrhythm, and filling the sequencers with random patterns seemed like a fun idea.

I implemented the random feature by placing a Random node and the selection expression inside the tap sequencer module patch, and creating an input node to set how dense I wanted the fills to be, using some value between zero and one as the reference value. The idea was that the random fill density in a multi-channel sequencer could be set by applying just a constant level to each of the tap sequence modules. For a single tap module, the random feature worked fine, but when I tried it in a multichannel sequencer, all modules always got the same pattern.

I fixed this by going into each instance of my tap sequence patch and giving it a unique seed.

As you can imagine, this is kind of tedious. Is it possible to have a unique seed assigned by default to each instance of the Random node? If that’s not feasible, could you add a seed input, so a user could supply a seed by expression rather than by setting a property? The ideal to me would be to have both these features, in case a user occasionally wants several Random nodes to issue the same values.
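To show what I mean outside of Audulus, here is a small Python sketch of the behavior. It uses Python’s own `random.Random` generator, not whatever the Random node uses internally, and the function name `random_fill` and its parameters are just for illustration.

```python
import random


def random_fill(seed, reference, steps=16):
    """Fill a step sequence: a step is 1 when a random value exceeds `reference`.

    `reference` is between 0 and 1; a higher reference gives a sparser pattern.
    """
    rng = random.Random(seed)  # each instance gets its own seeded generator
    return [1 if rng.random() > reference else 0 for _ in range(steps)]


# Same seed in every instance: every channel gets the identical pattern.
same = [random_fill(seed=0, reference=0.5) for _ in range(4)]

# A unique seed per instance (here simply the instance index): patterns can differ.
unique = [random_fill(seed=i, reference=0.5) for i in range(4)]
```

Here `same` holds four identical patterns, which is what I saw in the multichannel sequencer, while `unique` corresponds to the workaround of giving each instance its own seed. A seed input would amount to making the `seed` argument settable from the patch.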

By the way, what algorithm is being used for the Random node? What does the output distribution look like?